“Hello, I’m here.” Throaty, warm, and incredibly human, the first lines spoken by Samantha, the incorporeal female operating system in Spike Jonze’s 2013 film Her, are a far cry from the mechanical voice recognition technologies that we are used to. In addition to its thought-provoking philosophical predictions about the near future, Jonze’s film also homes in on the ideal of voice-replication technology: an artificially intelligent system so natural and intuitive that we can fall in love with it.

Replicating the intricacies of human characteristics and behaviors in non-sentient technologies is far from a new curiosity. Throughout history, creators of automata worked to give their machines the ability to pen poems, perform acrobatics, play musical instruments, and bat their eyelashes, and these self-operating machines were often admired and judged for their ability to replicate human behaviors. In perhaps the most dramatic fictional interaction with an automaton that blurs the lines separating human from machine, protagonist Nathaniel in E.T.A. Hoffmann’s short story “The Sandman” falls in love with automaton Olympia, whom he mistakes for a human female.

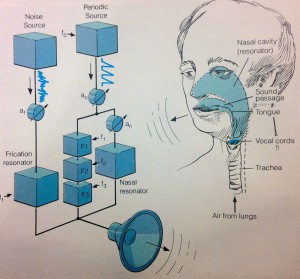

If the imitation and replication of human characteristics is one of the driving forces in the creation of artificial beings, it is also one of the greatest challenges. Moving away from automata and toward operating systems and other examples of artificial intelligence, the reproduction of human speech is one of the greatest hurdles to clear if we are to produce operating systems like Jonze’s fictional Samantha. The 1981 premiere issue of High Technology affirms that the simulation of human speech has historically been one of the most elusive replication technologies. One article, “Talking Machines Aim For Versatility,” discusses various methods used to replicate speech and reflects upon the value of machines that are capable of producing and understanding human speech.

At the time, most machine voices (like the voice on the operator line) either played back actual recordings of human speech or relied on early word synthesis technologies that tended to sound flat and robotic. Higher-end technologies were very expensive: the Master Specialties model 1650 synthesizer, for example, cost $550 for a one-word vocabulary, and each additional word cost $50, making the technology cost-prohibitive. Technologies have evolved considerably since the time of rudimentary “talking machines,” but the article discusses the potential of speech synthesis and compression technologies that would streamline the process of stringing phonemes (the smallest speech units) into complete sentences, thereby maximizing speech output. These concepts have influenced current speech synthesis techniques. While there are various approaches to “building” voices, the most common technique in the modern day is concatenative synthesis. A voice actor is recorded reading passages of text, random sentences, and words in a variety of cadences, and a text-to-speech engine then combines segments of these recordings to form new words and sentences. The technique vastly expands the range and comprehensiveness of operating systems like Apple’s Siri.
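For readers curious about what “combining recordings” might look like in practice, here is a toy sketch in Python of the basic idea behind concatenative synthesis. It is an illustration under stated assumptions, not a description of how Siri or any real text-to-speech engine works: the unit names, the tiny sample lists in `UNIT_INVENTORY`, and the `crossfade` and `synthesize` helpers are all hypothetical, and a real system would select among thousands of recorded segments rather than four.

```python
from typing import Dict, List

Waveform = List[float]

# Hypothetical inventory: each recorded speech unit maps to a few audio samples.
# The values are made up; real inventories are cut from hours of recordings.
UNIT_INVENTORY: Dict[str, Waveform] = {
    "HH": [0.0, 0.1, 0.2],
    "EH": [0.3, 0.5, 0.3],
    "L": [0.2, 0.1, 0.2],
    "OW": [0.4, 0.6, 0.1],
}


def crossfade(left: Waveform, right: Waveform, overlap: int) -> Waveform:
    """Join two waveforms, blending `overlap` samples at the seam."""
    overlap = min(overlap, len(left), len(right))
    if overlap == 0:
        return left + right
    blended = []
    for i in range(overlap):
        # The weight rises across the seam, fading `left` out and `right` in.
        weight = (i + 1) / (overlap + 1)
        left_sample = left[len(left) - overlap + i]
        blended.append((1 - weight) * left_sample + weight * right[i])
    return left[:-overlap] + blended + right[overlap:]


def synthesize(units: List[str], overlap: int = 1) -> Waveform:
    """Build one waveform by stringing recorded units together in order."""
    missing = [u for u in units if u not in UNIT_INVENTORY]
    if missing:
        raise KeyError(f"no recording for units: {missing}")
    output: Waveform = []
    for unit in units:
        output = crossfade(output, UNIT_INVENTORY[unit], overlap)
    return output


# Assemble a rough "hello" from four recorded units.
hello = synthesize(["HH", "EH", "L", "OW"])
```

The blending at each seam hints at why authenticity is hard: joining recordings abruptly produces audible breaks, and smoothing them is only a first step toward natural-sounding tone and inflection.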

The High Technology article reflects that because machines would be able to communicate in a form that is natural to humans, they would be better equipped to fulfill “the role of mankind’s servants, advisors, and playthings.” Over thirty years later, this goal is still extraordinarily relevant, especially as artificially intelligent systems become more integrated into consumer products like phones, tablets, cars, and security systems. Furthermore, advancements in voice recognition and synthesis technologies have benefited individuals with impairments who require speech-generating devices to communicate verbally. There are, of course, many challenges that still remain, including the ability of these systems to understand different human accents and dialects. Creating believable artificial speech, however, still harkens back to the greatest challenge in the evolution of these technologies: authenticity. Humans are able to register subtle changes in tone and inflection when we communicate with each other, and these subtleties are currently difficult to replicate in artificially intelligent systems, which explains why we can easily distinguish a human voice from that of a machine. Jonze’s film suggests that clearing this authenticity hurdle is essential to our ability to truly connect with our technology. Far from the utilitarian “servants, advisors, and playthings” suggested in the High Technology article, intuitive and human-like operating systems could alter our emotional relationship with machines to the extent that they become our confidants and romantic partners.

Intern Giorgina Paiella is an undergraduate student majoring in English and minoring in philosophy and women’s, gender, and sexuality studies. In her new blog series, “Man, Woman, Machine: Gender, Automation, and Created Beings,” she explores treatments of created and automated beings in historical texts and archival materials from Archives and Special Collections.