[slideshow_deploy id=’5552′]

Luis Géigel and his daughter, Bianca Géigel Lonergan in the stacks where part of the Puerto Rican collection is located (06/18/2014).

Last June 18, 2014 I had the pleasure to welcome to the archives two family members of the late Luisa Géigel—the last owner of what it is known as the Puerto Rican Collection, A.K.A. the Geigel Family Collection. Bianca Géigel Lonergan contacted me in June to see if we could arrange a visit to the collection so her father, Luis Géigel, could see the books her cousin (*), Luisa Geigel de Gandia sold to the archives. For the visit I gathered a selection of the books published by several members of the Géigel family in the collection as a way to reflect on the importance of this family in the development of Puerto Rico as a modern nation since the late 19th century.

As I mentioned in previous blog postings about this collection (2010 and 2012), the Archives and the Special Collections department, with the help of a former UConn History professor Francisco Scarano, acquired this collection in 1982 through several grants and financial supports from the Research Foundation, the College of Liberal Arts and Sciences, the Center of Latin American Studies, the Class of ’26, and the University of Connecticut Foundation, from Luisa Géigel de Gandia. Luisa Géigel de Gandia was an important artist in Puerto Rico especially in the 1940s. She was the first Puerto Rican female sculptor. She also was a painter and was the first women artist to exhibit several nude figure studies in Puerto Rico. She was a co-founder, together with Nilita Vientos Gastón, of the Arts Division at the Ateneo Puertorriqueño. From 1958-1986 she taught Sculpture, Drawing and Artistic Anatomy at the University of Puerto Rico, Río Piedras Campus. She was also a published author.

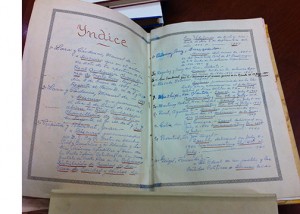

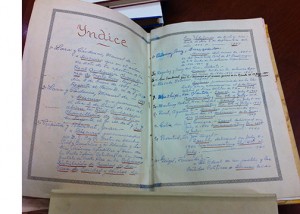

Index and inventory created by Luisa Géigel of the titles and location of all the books in the Géigel collection in her home in San Juan, PR

Together with her father and grandfather, she maintained, expanded and inventoried her family’s books and serials collection. As Dr. Scarano aptly described it, “this magnificent research collection, painstakingly nurtured by the Géigel family of San Juan for three generations, constitutes a bibliographic resource of national scholarly significance” (1).

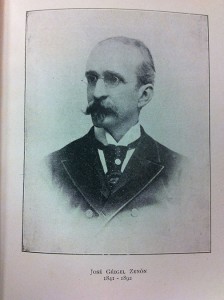

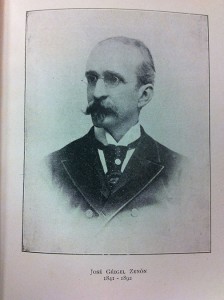

José Geigel y Zenón (1841-1892)

From rare literature gems from the 19th century and 20th century to agricultural and political treatises, this collection serves as a snapshot of the different cultural, political, scientific, and economic movements experimented in Puerto Rico in the past two centuries. The collection also reflected the various interests that drove the family members, José Géigel y Zenón (1841-1892), Fernando Géigel y Sabat (1881-1981), y Luisa Géigel de Gandia (1916-2008) to amassed this collection. Other individuals that donated materials to the original collection was Ramón Gandia Córdova, Luisa Géigel’s father-in-law who donated a good portion of the agricultural books found in this collection. There are other members of the Géigel family represented in the collection such as Vicente Géigel y Polanco (politician and former president of the Ateneo Puertorriqueño), A.D. Géigel (a translator of foreign novels during the 19th century), and Luis M. Géigel (agronomist and father and grandfather of our visitors).

The Geigel family members were great contributors to the cultural and political life of Puerto Rico and their work reflected their deep love and concerns about the past, present and future of Puerto Rico. José Géigel y Zenón, known as Pepe by his contemporaries, was part of the intellectual elite in 19th century Puerto Rico and was friend and/or relative to many important cultural figures such as Alejandro Tapia y Rivera and Manuel Zeno Gandia—who signed and dedicated their books to their dear friend Pepe. In term of cultural contributions, José Géigel y Zenón, together with Abelardo Morales Ferrer wrote one of the most definite Puerto Rican bibliography of their time titled, Bibliografía Puertorriqueña 1492-1894 which was produced between 1892 -1894. Later on his son, Fernando Géigel y Sabat published the first edition of this work in 1934. In addition, Fernando published a compilation of his father satirical writing that he published in different 19th century newspapers such as El Progreso, Don Simplicio, El Derecho, y La Azucena, titled, Artículos político-humoristico y literarios por Jose Géigel y Zenón (1936).

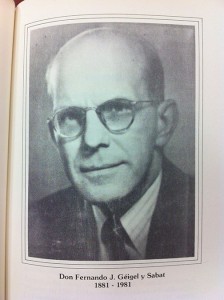

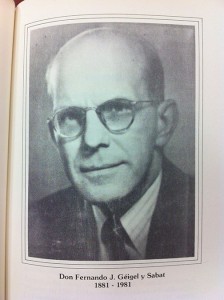

Fernando Géigel y Sabat (1881-1981)

Fernando Géigel y Sabat was also an important member of the family. A lawyer by training, he was a Manager of the City of San Juan (1939–1941) and published author. He authored several books which range from political topics such as El ideal de un pueblo y los partidos politicos (1940) to historical treatise, Balduino Enrico (1934), and Corsarios y piratas de Puerto Rico 1819-1825 (1946)—inspired in part by Alejandro Tapia y Rivera novel, Cofresí, which Tapia dedicated to Fernando’s father. Also present in the collection are several important titles from Vicente Géigel y Polanco. A politician, reporter, essayist, ateneísta, he was a pivotal figure in Puerto Rico during the mid-20th century. The collection has several of his books such as El problema universitario, on the role of the university in Puerto Rican culture, La independencia de Puerto Rico about independence as a political option for Puerto Rico, and his memoir about his work at the Ateneo Puertorriqueño, Mis recuerdos del Ateneo.

There are two books from Luisa Géigel in the collection, La genealogía y el apellido de Campeche and El paquete rojo o informe sobre la extinción de la moneda Macuquina. Luis M. Géigel’s work at the Estación Experiemental in Puerto Rico is also present with the title, El algodón “sea-island” en Puerto-Rico which is available at the Internet Archives. I have compiled a list with the books published by the Geigel family in the collection for your enjoyment.

Marisol Ramos, Curator, with Luis Géigel at the stacks

This visit by Bianca and Luis Géigel was quite a walk into memory lane. It helped me to contextualize this collection as part of a bigger project of imaging Puerto Rico as part of a broader cosmopolitan project that connected Puerto Rico with its past, present and possible futures. The vision of the Géigel family for Puerto Rico was multifaceted and its collection represented that diversity of thoughts, history, politics and cultural projects experimented during the 19th and 20th century. Walking with Luis and Bianca into the stacks at the Archives and Special Collections to see the books up close and personal, was like embarking in a time-traveling adventure similar to the ones imaged by the Alejandro Tapia y Rivera in one his stories; a type of magic only find in the archives…

Note: (*): Luis Géigel is Luisa’s first cousin once removed.

References:

Scarano, Francisco A. “The Géigel Puerto Rican Collection”. Harvest. The University of Connecticut Library, Fall 1982: 1-2.

Biographical Data for Luisa Géigel available at these sites:

Marisol Ramos, Curator for Latina/o, Latin American and Caribbean Collections

the same kind of thing but the record was interesting. John has never forgiven me for my note, and even if I’m not sure if we ever would really have become friends I have always been angry at myself for my insensitivity. John sent out a few copies of the record, which he had pressed for himself on his own Takoma label, and sold more through mail orders.

the same kind of thing but the record was interesting. John has never forgiven me for my note, and even if I’m not sure if we ever would really have become friends I have always been angry at myself for my insensitivity. John sent out a few copies of the record, which he had pressed for himself on his own Takoma label, and sold more through mail orders.